Have you ever gotten lost while reading a book, living through the characters and the events, transported over the course of a story into a foreign world? What if such a written universe could evolve depending on you, the text reacting discreetly when your heart is racing during a paragraph of action, or when your breath is taken away by the unexpected revelation of a protagonist?

This is a project about interactive fiction fueled by physiological signals, which we hereby add to our stash. While there were hints of a first prototype published four years prior, the current version is the result of a collaboration with the Magic Lab at Ben Gurion University, Israel. At CHI ’20 we published our paper, entitled “Physiologically Driven Storytelling: Concept and Software Tool”, which received a “Best Paper Honorable Mention”, awarded to the top 5% of submissions (references and a link to the original article are at the bottom).

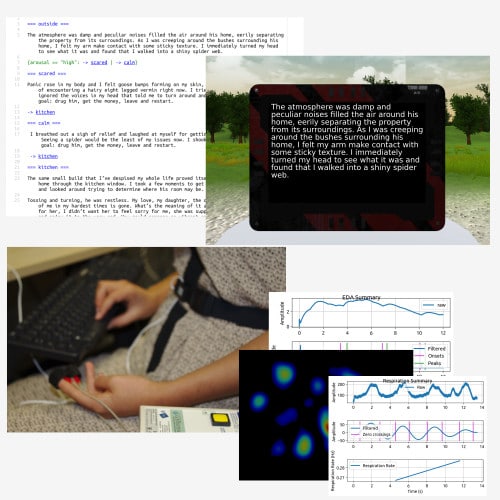

Beyond the publication and the research, we wish to provide writers and readers alike with a new form of storytelling. Thanks to the “PIF” engine (Physiological Interactive Fiction), it is now possible to write stories whose narrative can branch in real time depending on signals such as breathing, perspiration or pupil dilation, among others. To do so, the system combines a simplified markup language (Ink), a video-game rendering engine (Unity) and robust software for processing physiological signals in real time (OpenViBE). Depending on the situation, physiological signals can be acquired with laboratory equipment, as well as with off-the-shelf devices like smartwatches or… with Ullo’s own sensors.
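To make the idea of branching on a signal more concrete, here is a minimal sketch in Python. It is only an illustration under our own assumptions, not the PIF engine's code and not Ink syntax: the passage texts, the function names (`arousal_level`, `next_passage`), the resting baseline and the 10% margin are all hypothetical, and a real pipeline would rely on OpenViBE and the machine learning described in the paper rather than on a fixed threshold.

```python
from statistics import mean

# Hypothetical story node: one passage per reader state (illustrative text only).
BRANCHES = {
    "calm": "The corridor is quiet. You take your time reading the inscriptions.",
    "aroused": "Footsteps echo behind you. You skip the inscriptions and run.",
}


def arousal_level(breaths_per_minute, baseline, margin=0.10):
    """Classify the reader as 'calm' or 'aroused'.

    breaths_per_minute: recent breathing-rate samples (hypothetical input).
    baseline: the reader's resting breathing rate (assumed measured beforehand).
    margin: fraction above baseline that counts as aroused (arbitrary choice).
    """
    if not breaths_per_minute:
        # No signal available: fall back to the default branch.
        return "calm"
    recent = mean(breaths_per_minute)
    return "aroused" if recent > baseline * (1 + margin) else "calm"


def next_passage(breaths_per_minute, baseline):
    """Pick the passage that matches the reader's current state."""
    return BRANCHES[arousal_level(breaths_per_minute, baseline)]


if __name__ == "__main__":
    # A reader breathing faster than their resting rate gets the tense branch.
    print(next_passage([19.0, 20.5, 21.0], baseline=14.0))
```

The reader never makes an explicit choice here: the story simply follows whichever branch the signal points to, and falls back to a default passage when no signal is available.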

Interactive fiction’s origin story takes place in the late 70s, a time when “Choose Your Own Adventure” books (and the like) emerged alongside purely textual video games. In the former, one has to turn to a specific page depending on the choice made for the character; in the latter, precursors to adventure games, players have to type simple instructions on the keyboard, such as OPEN DOOR or GO NORTH, to advance in the story. One of the most famous of these games is Zork, by Infocom (which I [Jeremy] must confess I was never able to finish, unlike jewels such as A Mind Forever Voyaging or Planetfall, developed by the same company). A more detailed history can be found in the paper. Here we replace explicit interaction from the reader with implicit interaction, relying on physiology, with a pinch of machine learning to understand how signals evolve depending on the context. It is transparent for the reader, and the writer has no need to wield a programming language that is complex to learn, only a light syntax that is quick and easy to grasp.

If the vision (which is not without resemblance to elements that one can find in science-not-so-fiction-anymore works such as Ender’s Game or The Diamond Age) is ambitious, the project is still in its infancy. Still, two studies are on the menu of the first published full paper. In one we investigate the link between, on the one hand, the proximity of a story to the reader and, on the other hand, empathy toward the character. In the other study we look at what information physiological signals can provide about the reader, with first classification scores on constructs related to emotions, attention, or the complexity of the story. From there a whole world remains to be explored, with long-term measures and more focused stories. One of the scientific objectives we want to pursue is to understand how this technology could favor empathy: for example, opening up readers’ perspectives by helping them better grasp the point of view of a character who seems far too foreign at first. This is one lead among many, with, along the way, awareness of all the different (mis)uses.

Besides this more fundamental research, during the project’s next phase we expect to organize workshops around the tool. If you are an author boiling with curiosity, whether established or hobbyist (and not necessarily keen on new tech), don’t hesitate to reach out to us to try it out. We are also looking to build a community around the open-source software we developed; contributors are welcome!

On the technical side, we won’t deny ourselves the pleasure of next integrating devices such as the Muse 2 for a drop of muscular and brain activity, exploring virtual reality rendering (first visuals with the proof of concept “VIF”: http://phd.jfrey.info/vif/), or creating narrative worlds shared among several readers.

For more information, to keep an eye on the news related to the project, or to get acquainted with the (still rough) source code, you can visit the dedicated website we are putting up: https://pif-engine.github.io/.

Associated publications

Jérémy Frey, Gilad Ostrin, May Grabli, Jessica Cauchard. Physiologically Driven Storytelling: Concept and Software Tool. CHI ’20 – SIGCHI Conference on Human Factors in Computing Systems, Apr 2020, Honolulu, United States. 🏆 Best Paper Honorable Mention. ⟨10.1145/3313831.3376643⟩. ⟨hal-02501375⟩.

Gilad Ostrin, Jérémy Frey, Jessica Cauchard. Interactive Narrative in Virtual Reality. MUM 2018 – Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia, Nov 2018, Cairo, Egypt. pp. 463-467. ⟨10.1145/3282894.3289740⟩. ⟨hal-01971380⟩.

Jérémy Frey. VIF: Virtual Interactive Fiction (with a twist). CHI ’16 Workshop – Pervasive Play, 2016. ⟨hal-01305799⟩.