This project started as a(nother) collaboration with the Potioc research team and Joan Sol Roo, then a post-doc. Through this project we wanted to address some of the pitfalls related to tangible representations of physiological states.

At this point we had been working on the topic for approximately 8 years, creating, experimenting with and teaching about devices using physiological signals, while at the same time exchanging with various researchers, designers, artists, private companies, enthusiasts and lay users. We had a pretty good idea of the various friction points. Among the main issues we encountered: building devices is hard, even more so when they are meant to be used outside of the lab or given to novices. Because of that we started to work more on electronics, for example relying on embedded displays instead of spatial augmented reality, but we wanted to go one step further and explore a modular design that people could freely manipulate and customize.

We first wondered which basic “atoms” were necessary to recreate our past projects. Not so many, it turned out. Most projects boil down to a power source, a sensor, some processing and an output, no more. Various outputs can be aggregated to give multi-modal feedback. Communication can be added, to send data to or receive it from another location, as with Breeze. Data can be recorded or replayed. Some special form of processing can fuse multiple sensors (e.g. to extract an index of cardiac coherence) or measure the synchrony between several persons, as with Cosmos. And this is it: we have our set of atoms, or bricks, that people can assemble in various ways to recreate existing devices or create new ones. Going tangible always comes with a trade-off in flexibility or freedom compared to digital or virtual (e.g. it is harder and more costly to duplicate an item), but it also brings invaluable benefits, as people are more likely to manipulate, explore and tinker with physical objects (there is more to the debate, but that is for another place).
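To make the “atom” decomposition concrete, here is a minimal Python sketch, assuming each brick can be modeled as a function that turns one normalized level (a stand-in for the analog voltage passed between bricks) into another. The `Brick` class, the `assemble` helper and the three example bricks are hypothetical illustrations, not the Coral firmware.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Brick:
    """Hypothetical model of a brick: one role, one transfer function."""
    name: str
    role: str  # "sensor", "processing" or "output"
    transfer: Callable[[float], float]


def assemble(bricks: List[Brick]) -> Callable[[float], float]:
    """Chain bricks so that the output of one feeds the input of the next."""
    def pipeline(signal: float) -> float:
        for brick in bricks:
            signal = brick.transfer(signal)
        return signal
    return pipeline


def clamp(x: float) -> float:
    """Keep a level inside the normalized 0.0-1.0 range."""
    return max(0.0, min(1.0, x))


# Hypothetical bricks: a heart-rate sensor mapping 40-180 bpm onto 0.0-1.0,
# a processing brick that inverts the level, and a light driven at 0-255.
sensor = Brick("heart rate", "sensor", lambda bpm: clamp((bpm - 40) / 140))
invert = Brick("invert", "processing", lambda level: 1.0 - level)
light = Brick("light", "output", lambda level: round(level * 255))

device = assemble([sensor, invert, light])
print(device(110))  # mid-range heart rate gives a mid-range brightness
```

Swapping `invert` for another processing brick changes what the device does without touching the sensor or the output, which is the property the atom decomposition is after: each brick stays single-purpose and the assembly is just their composition.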

We are of course not the first to create a modular toolkit; many projects and products provide approaches to explore electronics or computer science, and the archetypal example, which is also a direct inspiration, is Lego bricks themselves. However, we push for such a form factor in the realm of physiological computing. More importantly, we use the properties of such a modular design to address other issues pertaining to biofeedback applications: how to ensure that the resulting applications empower users rather than enslave them?

Throughout the project, we aimed to make it possible to interact with the artifacts under the same expectations of honest communication that occur between people, based on understanding, trust, and agency.

- Understanding: Mutual comprehension implies a model of your interlocutor’s behavior and goals, and a communication protocol understandable by both parties. Objects should be explicable, a property that is facilitated when each one performs atomic and specific tasks.

- Trust: To ensure trust and prevent artifacts from appearing as a threat, their behavior must be consistent and verifiable, and they should perform only explicitly accepted tasks for given objectives. Users should be able to doubt the inner workings of a device, overriding the code or the hardware if they wish to do so.

- Agency: As the objective is to act towards desirable experiences, control and consent are important (which cannot happen without understanding and trust). Users should be able to disable undesired functionalities, and to customize how and to whom the information is presented. Objects should be simple and inexpensive enough that users can easily extend their behavior.

Coral (also called “blobs”, “totems”, “physio-bricks”, “physio-stacks”… names were many) was created to implement those requirements. In particular, bricks were made:

- Atomic: each brick should perform a single task.

- Explicit: each task should be explicitly listed.

- Explicable: the inner behavior of an element should be understandable.

- Specific: each element’s capabilities should be restricted to the desired behavior, and unable to perform unexpected actions.

- Doubtable: behaviors can be checked or overridden.

- Extensible: new behaviors should be easily supported, providing forward compatibility.

- Simple: As a means to achieve the previous items, simplicity should be prioritized.

- Inexpensive: to support the creation of diverse, specific elements, each of them should be rather low cost.
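The Explicit and Specific properties can be read as a contract: each brick declares the sensitive tasks it is able to perform, and an assembly can do at most what its bricks declare. The Python sketch below illustrates that reading; the `Capability` values, the `LabeledBrick` class and the `check_consent` helper are hypothetical names chosen for illustration, not part of the Coral project.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Iterable, Set


class Capability(Enum):
    """Hypothetical set of sensitive tasks a brick may declare."""
    STORAGE = "storage"
    PROCESSING = "processing"
    WIRELESS = "wireless communication"


@dataclass(frozen=True)
class LabeledBrick:
    name: str
    # Explicit: every sensitive task is listed; an empty set means none.
    capabilities: FrozenSet[Capability] = frozenset()


def assembly_labels(bricks: Iterable[LabeledBrick]) -> Set[Capability]:
    """An assembly can do at most what its bricks declare (union of labels)."""
    labels: Set[Capability] = set()
    for brick in bricks:
        labels |= brick.capabilities
    return labels


def check_consent(bricks: Iterable[LabeledBrick],
                  consented: Set[Capability]) -> Set[Capability]:
    """Return the declared capabilities the user has NOT agreed to."""
    return assembly_labels(bricks) - consented


# Hypothetical bricks: a plain sensor, a recorder and a radio link.
sensor = LabeledBrick("heart rate sensor")
recorder = LabeledBrick("recorder", frozenset({Capability.STORAGE}))
radio = LabeledBrick("radio link", frozenset({Capability.WIRELESS}))

print(check_consent([sensor, recorder, radio], {Capability.STORAGE}))
```

Because the labels live on the bricks themselves, removing a brick also removes its capability from the assembly, which is one way to picture how Agency (disabling undesired functionalities) follows from Atomic and Explicit.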

For example, to keep the communication between atoms Simple and Explicable, we opted for analog communication. Because no additional meta-data is shared outside a given atom unless explicitly stated, the design is Extensible, making it possible to create new atoms and functionalities, similar to the approach used for modular audio synthesis. A side effect of analog communication is its sensitivity to noise: we accepted this, as we consider the gain in transparency worth it. It can be argued that this approach leads to a naturally degrading signal (i.e. a “biodegradable biofeedback”), ensuring that the data has a limited life span and thus limiting the risk that it could leak outside its initial scope and application.

Going beyond, in order to explicitly inform users, we established labels to notify them of the type of “dangerous” actions the system is capable of performing. A standard iconography was chosen to represent basic functions (e.g. a floppy disk for storage, gears for processing, waves for wireless communication, …). We consider that, similar to the food labeling being enforced in some countries, users should be aware of the risks involved for their data when they engage with a device, and thus be able to make an informed decision. In line with our objectives, everything is meant to be open-source, from the code to the schematics to the instructions; we just have to populate the placeholder at https://ullolabs.github.io/physio-stacks.docs/.
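The “biodegradable biofeedback” argument can be illustrated with a simple noise model. The Python sketch below assumes, purely for illustration, that every analog link adds independent Gaussian noise with the same standard deviation; under that assumption the noise variances add, so the RMS deviation from the original signal grows with the square root of the number of hops. The `relay` helper, the breathing signal and the noise level are hypothetical, not measurements from Coral.

```python
import math
import random
from typing import List


def relay(signal: List[float], hops: int, noise_std: float,
          rng: random.Random) -> List[float]:
    """Pass a signal through `hops` analog links, each adding Gaussian noise."""
    out = list(signal)
    for _ in range(hops):
        out = [v + rng.gauss(0.0, noise_std) for v in out]
    return out


def rms_error(a: List[float], b: List[float]) -> float:
    """Root-mean-square deviation between two equally long signals."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def expected_rms_error(hops: int, noise_std: float) -> float:
    """Independent noise adds in variance: sigma_total = sigma * sqrt(hops)."""
    return noise_std * math.sqrt(hops)


# Hypothetical breathing signal: 0.25 Hz sine sampled at 10 Hz for 60 s.
breathing = [math.sin(2 * math.pi * 0.25 * t / 10) for t in range(600)]
rng = random.Random(42)
for hops in (1, 4, 16):
    degraded = relay(breathing, hops, noise_std=0.05, rng=rng)
    print(hops, round(rms_error(breathing, degraded), 3),
          round(expected_rms_error(hops, 0.05), 3))
```

Under this model the degradation is gradual rather than abrupt: a short chain of bricks remains usable for feedback, while each additional analog hop (or re-recording) moves the signal further away from the original measurement.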

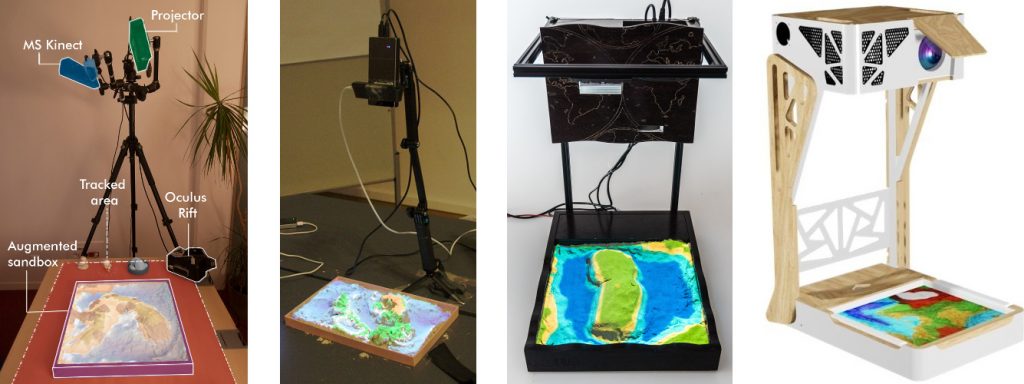

Over the last two years [as of 2021] we already performed several small-scale tests with Coral, demoing and presenting the bricks to researchers, designers and children (more details in the Mobile HCI ’20 paper below). The project is also moving fast in terms of engineering: the third iteration of the bricks is easier to use and to craft, requiring only 3D printing, a soldering iron and basic electronic components. While the first designs were based on the idea of “stacking” bricks, the later ones explore the 2D space, more akin to building tangrams.

v1, stacking

v2, Coral

v3, pogo connectors

This tangible, modular approach enables the construction of physiological interfaces that can be used as a prototyping toolkit by designers and researchers, or as didactic tools by educators and pupils. We are actively working with our collaborators toward a version of the bricks that could be used in class, combining the teaching of STEM-related disciplines with benevolent applications that could favor interaction and bring well-being.

We are also very keen to interface the bricks with existing devices. Since the communication between bricks is analog, they can directly interact with standard controllers such as the Microsoft Xbox Adaptive Controller (XAC), to play existing games with our physiological activity, or with analog audio synthesizers.

Coral connected to Microsoft XAC

Coral connected to Behringer Neutron audio synthesizer

Our work was presented at the Mobile HCI ’20 conference, video below for a quick summary of the research:

Contributors

In the spirit of honest and transparent communication, here is the list of past and current contributors to the project (in very rough order of appearance):

Joan Sol Roo: Discussion, Concept, Fabrication, Applications, Writing (v1)

Jérémy Frey: Discussion, Concept, Applications, Writing, Funding (v1, v2, v3)

Renaud Gervais: Early concept (v1)

Thibault Lainé: Discussion, Electronics and Fabrication considerations (v1)

Pierre-Antoine Cinquin: Discussion, Human and Social considerations (v1)

Martin Hachet: Project coordination, Funding (v1)

Alexis Gay: Scenarios (v1)

Rémy Ramadour: Electronics, Fabrication, Applications, Funding (v2, v3)

Thibault Roung: Electronics, Fabrication (v2, v3)

Brigitte Laurent: Applications, Scenarios (v2, v3)

Didier Roy: Scenarios (v2)

Emmanuel Page: Scenarios (v2)

Cassandra Dumas: Electronics, Fabrication (v3)

Laura Lalieve: Electronics, Fabrication (v3)

Sacha Benrabia: Electronics, Fabrication (v3)

Associated Publications

Joan Sol Roo, Renaud Gervais, Thibault Lainé, Pierre-Antoine Cinquin, Martin Hachet, Jérémy Frey. Physio-Stacks: Supporting Communication with Ourselves and Others via Tangible, Modular Physiological Devices. MobileHCI ’20: 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services, Oct 2020, Oldenburg / Virtual, Germany. pp.1-12, ⟨10.1145/3379503.3403562⟩. ⟨hal-02958470⟩.